3 days trial then $20.00/month - No credit card required now

Keboola Uploader

Reliable uploader of Apify Datasets to Keboola Connection (aka KBC). Integration-ready.

The actor uses the Keboola Storage API Importer with optimal defaults. It is helpful in workflows or for ad-hoc data uploads.

This actor is a generalisation of the custom-made uploaders we built for many of our projects. It uses minimal dependencies and is optimized for speed and reliability.

- gracefully handles migrations

- implements retry policy for failed uploads

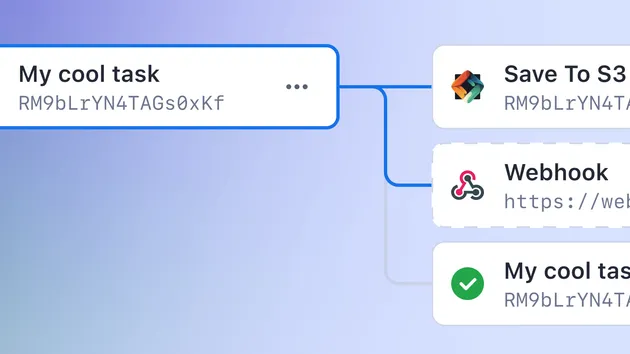

- supports Actor Integration

- lets you fine-tune the batch size for optimal use of resources

Your Apify Dataset will be split into batches, converted to CSV and uploaded with gzip compression enabled.

Choose the batchSize according to the nature of your data. Primitive properties from your Dataset are mapped 1:1 to columns of the CSV table. Complex properties (arrays and objects) are serialized to JSON, so you can use Snowflake's JSON support in your transformations.
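The conversion described above can be sketched in a few lines. This is a minimal illustration, not the actor's actual code: the function names `to_cell` and `batch_to_gzipped_csv` are hypothetical, and rendering missing values as empty cells is an assumption.

```python
import csv
import gzip
import io
import json


def to_cell(value):
    """Map one Dataset property to a CSV cell (hypothetical helper)."""
    if value is None:
        # Assumption: missing values become empty cells.
        return ""
    if isinstance(value, (dict, list)):
        # Complex properties (objects and arrays) are serialized to JSON text.
        return json.dumps(value, ensure_ascii=False)
    # Primitive properties are written as-is.
    return value


def batch_to_gzipped_csv(items, headers):
    """Render one batch of items as a gzip-compressed CSV document."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(headers)
    for item in items:
        writer.writerow([to_cell(item.get(name)) for name in headers])
    return gzip.compress(buffer.getvalue().encode("utf-8"))


items = [{"url": "https://example.com", "price": 9.5, "tags": ["a", "b"]}]
payload = batch_to_gzipped_csv(items, ["url", "price", "tags"])
print(gzip.decompress(payload).decode("utf-8"))
```

The `tags` column ends up as a JSON string inside a single CSV cell, which is the form you would later parse in a transformation.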

Inputs

Dataset ID

ID of the Apify Dataset that should be uploaded to Keboola. When you use this actor in an Integrations workflow, this field is optional: the default Dataset of the previous actor in the flow will be used.

Keboola Stack

Hostname of your Keboola stack import endpoint. See the Keboola documentation for more details. The default is import.keboola.com for the AWS US-East region.

Alternatively, you can set the KEBOOLA_STACK environment variable.

Current multi-tenant stacks are:

| Region | Hostname |
|---|---|
| US Virginia AWS | import.keboola.com |
| US Virginia GCP | import.us-east4.gcp.keboola.com |
| EU Frankfurt AWS | import.eu-central-1.keboola.com |
| EU Ireland Azure | import.north-europe.azure.keboola.com |
| EU Frankfurt GCP | import.europe-west3.gcp.keboola.com |

If you are a single-tenant user, your hostname has the format import.CUSTOMER_NAME.keboola.com.
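As a rough sketch of how the hostname could be resolved, the snippet below tries the actor input first, then the KEBOOLA_STACK environment variable, then the documented default. The function name `resolve_stack` is hypothetical, and the precedence of input over environment is an assumption; the text above only says that either can be used.

```python
import os

# Default stack named in the text above (AWS US-East region).
DEFAULT_STACK = "import.keboola.com"


def resolve_stack(input_value=None, env=None):
    """Pick the import hostname (hypothetical helper).

    Assumed precedence: explicit input, then KEBOOLA_STACK, then the default.
    """
    env = os.environ if env is None else env
    return input_value or env.get("KEBOOLA_STACK") or DEFAULT_STACK


print(resolve_stack("import.eu-central-1.keboola.com"))
```

Passing `env` explicitly keeps the function easy to exercise without touching the real process environment.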

Keboola Storage API Key

Your API key for the Keboola project where you want to upload the data.

You should generate a new API key just for this actor, with rights limited to writing to the destination bucket only.

Alternatively, you can set the KEBOOLA_STORAGE_API_KEY environment variable.

Bucket

Name of the destination Keboola bucket, e.g. in.c-apify

Table

Name of the destination Keboola table, e.g. scrape_results

Headers

Array of header names for the destination Keboola table.

You can use this to select a subset of properties for the result table or to reorder the columns. The order of the headers is preserved in the result table.

You can leave it blank if all your Dataset items always have every property specified (no undefined values).

In that case, the properties of the first Dataset item are used as headers.

We recommend being explicit to prevent unexpected data loss.
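The data-loss risk is easiest to see with a small example. The sketch below is an illustration under assumptions, not the actor's code: `resolve_headers` and `project` are hypothetical names, and missing properties are represented as `None`.

```python
def resolve_headers(items, headers=None):
    """Use explicit headers if given, else the keys of the first item."""
    if headers:
        return list(headers)
    return list(items[0].keys()) if items else []


def project(item, headers):
    """Select and order the item's properties according to the headers."""
    return [item.get(name) for name in headers]


# The first item lacks "price", so implicit headers silently drop that column.
items = [{"url": "a"}, {"url": "b", "price": 10}]

implicit = resolve_headers(items)
print(implicit)  # ['url']

explicit = resolve_headers(items, ["price", "url"])
print([project(item, explicit) for item in items])  # [[None, 'a'], [10, 'b']]
```

With explicit headers the `price` column survives, and the columns appear in the order you listed them.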

Batch Size

Size of the batch to upload. The Dataset will be split into multiple batches if it has more items than this number. Batches are uploaded sequentially. Choose the batch size according to the nature of your data and the parallelization of your process. Generally speaking, Keboola Importer works best if you send larger portions of data (dozens of MB gzipped) less frequently. On the other hand, you are constrained by the Actor's memory size: you can easily hit an out-of-memory (OOM) condition when this number is too high.
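Splitting a Dataset into sequential batches can be sketched as a generator that holds only one batch in memory at a time, which is where the trade-off with Actor memory comes from. The name `iter_batches` is hypothetical and this is not the actor's actual implementation.

```python
from itertools import islice


def iter_batches(items, batch_size):
    """Yield consecutive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be a positive integer")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Five items with a batch size of two give batches of 2, 2 and 1 items.
batches = list(iter_batches(range(5), 2))
print([len(batch) for batch in batches])  # [2, 2, 1]
```

A larger `batch_size` means fewer, bigger uploads, but each batch must fit in memory together with its CSV and gzip representations.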

Incremental load

When enabled, imported data is appended to the existing table.

When disabled, the table is truncated first: all existing data is deleted from the table before the import.

The default is enabled (true).

- Created in May 2024

hckr.studio
